WRF dynamical core for LES/mesoscale simulations

Contents

- 1 Introduction

- 2 Studies with PCM+WRF

- 3 WRF equations

- 4 Installing & Compiling the Generic PCM + WRF

- 4.1 3 git repositories to handle

- 4.2 .env file

- 4.3 Download child repositories

- 4.4 Modify options to fit your compiling environment & your modelled planetary atmosphere

- 4.5 Terrestrial WRF

- 4.6 Put the 3 repositories on the right branch/commit

- 4.7 Execute installing and compiling files

- 4.8 Executables

- 4.9 Some tricks if it does not work

- 5 Running a simulation

- 6 Remarks

Introduction

WRF is a mesoscale numerical weather prediction system designed for both atmospheric research and operational forecasting of the Earth's atmosphere (see the WRF presentation).

In the PCM, we take advantage of the dynamical core of the WRF model to run LES (large-eddy simulations) or mesoscale simulations.

To do so, we take the WRF model, remove all its physical packages related to the Earth's atmosphere, and plug in the physical package from the PCM (either Venus, Mars, Titan, or Generic).

The equations solved by WRF are described in Skamarock et al. (2019, 2021).

Studies with PCM+WRF

Applications of the WRF dynamical core coupled to the PCM are described in:

- Noé Clément's PhD thesis (chapter 3) for the Generic PCM.

- Maxence Lefèvre's PhD thesis for the Venus PCM.

- Aymeric Spiga's PhD thesis for the Mars PCM.

Studies with the PCM coupled to the WRF dynamical core have used WRF V2, V3, and V4.

We now aim to use WRF V4 as much as possible, as it offers significant improvements, in particular in its numerical schemes.

Here is a list of studies using the PCM coupled to the WRF dynamical core:

| Planet | Reference | Physical package | Dynamical core |
|---|---|---|---|
| Mars | Spiga and Forget (2009) | Mars PCM | WRF V2 |
| Mars | Spiga et al. (2010) | Mars PCM | WRF V3 |
| Venus | Lefèvre et al. (2018) | Venus PCM | WRF V3 |
| Venus | Lefèvre et al. (2020) | Venus PCM | WRF V2 |
| Exoplanets | Lefèvre et al. (2021) | Generic PCM | WRF V3 |
| Exoplanets | Leconte et al. (2024) | Generic PCM | WRF V4 |
| Uranus, Neptune | Clément et al. (2024) | Generic PCM | WRF V4 |

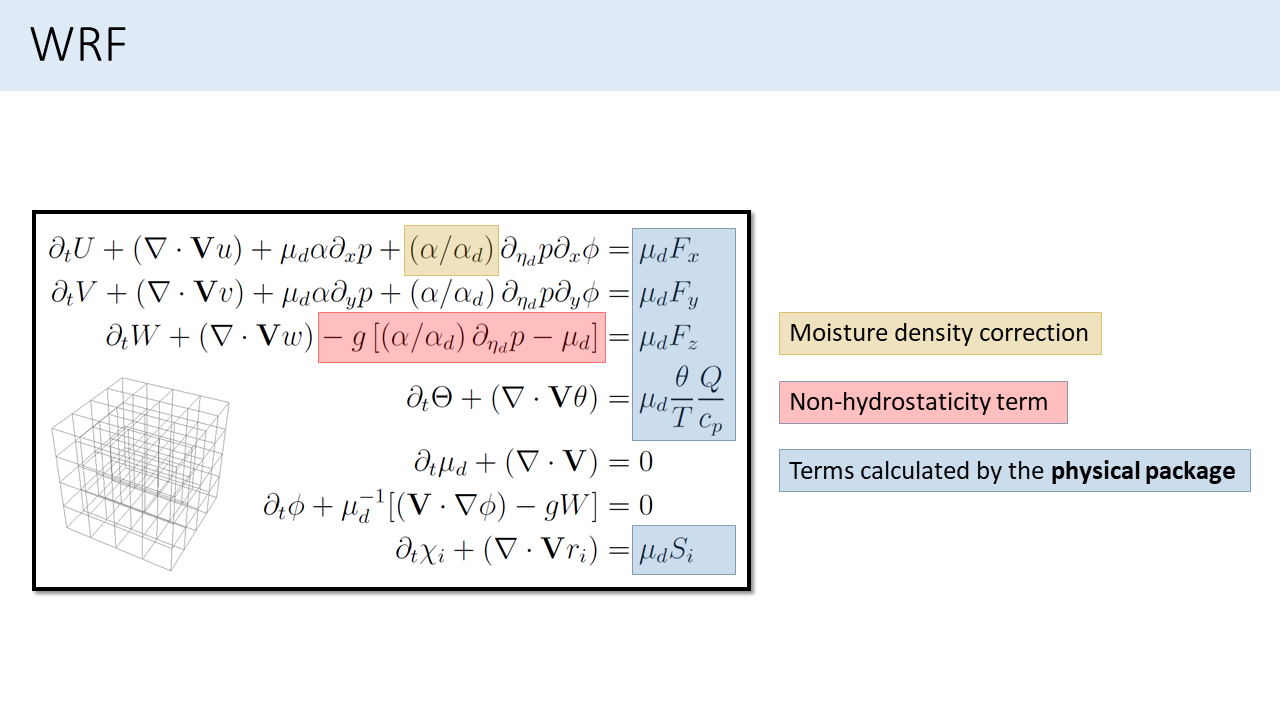

WRF equations

Below is a synthesis of equations solved by WRF.
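As a compact reference, here are the dry flux-form Euler equations as given in the WRF technical descriptions, written in the terrain-following hydrostatic-pressure coordinate $\eta = (p_h - p_{ht})/\mu$, where $\mu = p_{hs} - p_{ht}$ is the column mass per unit area, $(U, V, W, \Omega, \Theta) = \mu\,(u, v, w, \dot\eta, \theta)$, $\phi = gz$ is the geopotential, $\alpha = 1/\rho$ and $\gamma = c_p/c_v$. Map factors are omitted, and Coriolis, curvature, turbulent mixing and physics tendencies are lumped into the $F$ terms. This is a simplified summary, not the full moist, hybrid-coordinate system solved by WRF V4; see Skamarock et al. (2019, 2021) for that.

$$
\begin{aligned}
\partial_t U + (\nabla\cdot\mathbf{V}u) - \partial_x(p\,\phi_\eta) + \partial_\eta(p\,\phi_x) &= F_U\\
\partial_t V + (\nabla\cdot\mathbf{V}v) - \partial_y(p\,\phi_\eta) + \partial_\eta(p\,\phi_y) &= F_V\\
\partial_t W + (\nabla\cdot\mathbf{V}w) - g\,(\partial_\eta p - \mu) &= F_W\\
\partial_t \Theta + (\nabla\cdot\mathbf{V}\theta) &= F_\Theta\\
\partial_t \mu + (\nabla\cdot\mathbf{V}) &= 0\\
\partial_t \phi + \mu^{-1}\left[(\mathbf{V}\cdot\nabla\phi) - g\,W\right] &= 0\\
\partial_\eta \phi &= -\alpha\,\mu\\
p &= p_0\left(\frac{R_d\,\theta}{p_0\,\alpha}\right)^{\gamma}
\end{aligned}
$$

Here $\nabla\cdot\mathbf{V}a = \partial_x(Ua) + \partial_y(Va) + \partial_\eta(\Omega a)$, $\mathbf{V}\cdot\nabla a = U\partial_x a + V\partial_y a + \Omega\partial_\eta a$, and the subscripts $x$, $y$, $\eta$ on $\phi$ denote partial derivatives. Physics packages, such as the PCM one here, act through the forcing terms $F$.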

Installing & Compiling the Generic PCM + WRF

3 git repositories to handle

Review of the repositories

- LES_planet_workflow

- git-trunk (physics & GCM dynamical core), which is named "code" once cloned

- WRFV4 (dynamics)

The first is hosted on Aymeric Spiga's git; the other two are then downloaded (git clone) from within it. They are part of the repositories of La communauté des modèles atmosphériques planétaires (the planetary atmospheric models community).

All 3 repositories are managed with git, so you will need to juggle a little between them to make sure each one is on the right branch for your project (see "Put the 3 repositories on the right branch/commit").

Downloading/Cloning the parent repository

The first step is downloading (i.e. cloning) the parent repository 'LES_planet_workflow' from Aymeric Spiga's git (you will download the other two later).

Put this repo on the branch "wrfv4".
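The two steps above amount to the following sketch. get_workflow is a hypothetical helper, and the repository address is left as an argument because the URL of Aymeric Spiga's git is not given here.

```shell
#!/bin/bash
# Clone LES_planet_workflow and switch it to the "wrfv4" branch.
# $1: address of the LES_planet_workflow repository (placeholder, not given here).
get_workflow() {
    local url="$1"
    git clone "$url" LES_planet_workflow || return 1
    # "git checkout wrfv4" creates a local branch tracking origin/wrfv4.
    git -C LES_planet_workflow checkout wrfv4
}
```

Usage: `get_workflow "<address of LES_planet_workflow>"`, run from your work directory.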

.env file

!!! So far, only the Intel compiler has been tested and works. !!!

On any cluster, you need to create a file "mesoscale.env" that looks roughly like this:

# mesoscale.env file

source YOUR_ifort_ENV

# for WRFV4

declare -x WRFIO_NCD_LARGE_FILE_SUPPORT=1

declare -x NETCDF=`nf-config --prefix`

declare -x NCDFLIB=$NETCDF/lib

declare -x NCDFINC=$NETCDF/include

# PCM/WRFV4 interface

declare -x LMDZ_LIBO='code/LMDZ.COMMON/libo/YOUR_ARCH_NAME'

On Adastra, the following file works:

# mesoscale.env file

source code/LMDZ.COMMON/arch/arch-ADASTRA-ifort.env

# for WRFV4

declare -x WRFIO_NCD_LARGE_FILE_SUPPORT=1

declare -x NETCDF=$NETCDF_DIR

# alternatively: declare -x NETCDF=`nf-config --prefix`

declare -x NCDFLIB=$NETCDF/lib

declare -x NCDFINC=$NETCDF/include

# specific to ADASTRA

declare -x NETCDF_classic=1

# fix for "undefined symbol: __libm_feature_flag" errors at runtime:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$NETCDF_DIR/lib

# PCM/WRFV4 interface

declare -x LMDZ_LIBO=code/LMDZ.COMMON/libo/ADASTRA-ifort

Source this file before any compilation step, and again before running the compiled executables for a simulation. (Ideally, this will eventually be done automatically, with no need to source it manually.)

If you work on Adastra, you can use the file above as-is: save it as "mesoscale.env" in the LES_planet_workflow directory. It works for both terrestrial WRF and the Generic PCM+WRF. Note that its relative paths (code/...) assume it is sourced from the LES_planet_workflow directory.
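After sourcing mesoscale.env, a quick sanity check can catch a missing variable before a long compilation fails. This is a sketch: check_mesoscale_env is a hypothetical helper, and it only checks the variable names used in the two example files above.

```shell
#!/bin/bash
# Check that the variables exported by mesoscale.env are set,
# and that the NetCDF lib/include directories exist.
check_mesoscale_env() {
    local status=0 var
    for var in WRFIO_NCD_LARGE_FILE_SUPPORT NETCDF NCDFLIB NCDFINC LMDZ_LIBO; do
        if [ -z "${!var}" ]; then          # bash indirect expansion
            echo "missing: $var" >&2
            status=1
        fi
    done
    for var in NCDFLIB NCDFINC; do
        if [ -n "${!var}" ] && [ ! -d "${!var}" ]; then
            echo "not a directory: $var=${!var}" >&2
            status=1
        fi
    done
    return $status
}
```

Usage, from LES_planet_workflow: `source mesoscale.env && check_mesoscale_env && echo "environment OK"`.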

Download child repositories

In 'LES_planet_workflow', run the 1_downloading.sh script to download (i.e. clone) the 'git-trunk' repository (which will be named 'code').

Then download (i.e. clone) the WRFV4 repository into the 'LES_planet_workflow/code/WRF.COMMON' directory (keep the name "WRFV4").

Now you have the 3 repositories!
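The two download steps can be sketched as follows, run from LES_planet_workflow. download_children is a hypothetical helper, and the WRFV4 address is left as an argument since it is not given here.

```shell
#!/bin/bash
# Download the two child repositories, from inside LES_planet_workflow.
# $1: address of the WRFV4 repository (placeholder, not given here).
download_children() {
    local wrfv4_url="$1"
    ./1_downloading.sh || return 1                # clones git-trunk as "code"
    git clone "$wrfv4_url" code/WRF.COMMON/WRFV4  # keep the name "WRFV4"
}
```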

Modify options to fit your compiling environment & your modelled planetary atmosphere

In 'LES_planet_workflow', modify the 0_defining.sh file to fit your Intel environment:

archname="ADASTRA-ifort" # (or other cluster)

Also set the physics compilation options in 0_defining.sh, for example:

add="-t 1 -b 20x34 -d 25" # Uranus & Neptune with CH4 visible bands (these compilation options may be deprecated)
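If you prefer to script these edits, a sketch with GNU sed is shown below. set_defining_option is a hypothetical helper, and it assumes each assignment starts at the beginning of its line in 0_defining.sh (any trailing comment on that line is dropped).

```shell
#!/bin/bash
# Replace the line starting with NAME= in 0_defining.sh by NAME="value".
# Uses GNU "sed -i"; values must not contain "|", "&" or "\",
# which are special in the sed replacement used here.
set_defining_option() {
    local name="$1" value="$2" file="${3:-0_defining.sh}"
    if ! grep -q "^${name}=" "$file"; then
        echo "no line starting with ${name}= in $file" >&2
        return 1
    fi
    sed -i "s|^${name}=.*|${name}=\"${value}\"|" "$file"
}
```

Usage: `set_defining_option archname ADASTRA-ifort` and `set_defining_option add "-t 1 -b 20x34 -d 25"`.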

Terrestrial WRF

Compiling terrestrial WRF is a good first step to check if the environment is working.

Somewhere else in your work directory, run:

git clone --recurse-submodules https://github.com/wrf-model/WRF

cd WRF

Before executing the following commands, source the "mesoscale.env" file (adapt the path to where you saved it):

source mesoscale.env

Commands:

./configure

During the configure step, you will be asked to choose a compiler: select "INTEL (ifort/icc) dmpar" (probably option 15). You will then be asked about nesting: choose "basic" (option 1).

then

./compile em_les

WRF compilation can take a while; consider running it in the background:

nohup ./compile em_les &

The resulting executables are "ideal.exe" and "wrf.exe", in the WRF/main directory.
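Since compilation errors are easy to miss in a long log, a small check that both executables were produced can help. check_wrf_build is a hypothetical helper; the directory argument is the WRF source tree.

```shell
#!/bin/bash
# Check that a WRF build produced ideal.exe and wrf.exe in <WRF dir>/main.
check_wrf_build() {
    local dir="${1:-.}" exe missing=0
    for exe in ideal.exe wrf.exe; do
        if [ ! -x "$dir/main/$exe" ]; then
            echo "missing or not executable: $dir/main/$exe" >&2
            missing=1
        fi
    done
    return $missing
}
```

Usage: `check_wrf_build WRF` for terrestrial WRF, or `check_wrf_build LES_planet_workflow/code/WRF.COMMON/WRFV4` for the Generic PCM+WRF build, whose executables end up in the same main directory layout.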

If you selected options 15 and 1 when compiling terrestrial WRF, you do not need to change anything. Otherwise, edit the following lines of the 0_defining.sh file:

echo 15 > configure_inputs

echo 1 >> configure_inputs

and replace 15 and 1 with the options you selected.
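These two lines write the answers to WRF's two configure questions (compiler, then nesting), one per line. Presumably they are then fed to the interactive configure script on standard input; the sketch below reproduces this by hand, with write_configure_inputs as a hypothetical helper.

```shell
#!/bin/bash
# Write the answers to WRF's configure questions, one per line:
# $1 = compiler option (default 15), $2 = nesting option (default 1).
write_configure_inputs() {
    local compiler="${1:-15}" nesting="${2:-1}"
    echo "$compiler" > configure_inputs
    echo "$nesting" >> configure_inputs
}
# Usage, in the WRF directory (assumption: configure reads its answers from stdin):
#   write_configure_inputs 15 1
#   ./configure < configure_inputs
```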

Put the 3 repositories on the right branch/commit

- LES_planet_workflow repo: branch "wrfv4" (normally already done).

- git-trunk (= code) repo: a branch that matches your physics developments.

The branch "hackathon_wrfv4" works for the Uranus/Neptune test cases.

This commit shows what to modify to work with a tracer other than water (e.g., CH4).

See this commit for a fix of a small bug in the slab ocean model. (!!! For developers: this fix still has to be merged into the master branch !!!)

- WRFV4 repo: branch "generic".
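Before compiling, it is worth checking that each repository is on the expected branch. check_branch is a hypothetical helper; if you checked out a specific commit instead of a branch, it reports a detached HEAD, and `git -C <repo> rev-parse HEAD` gives the commit.

```shell
#!/bin/bash
# Compare the current branch of a repository with the expected one.
check_branch() {
    local repo="$1" expected="$2" current
    current=$(git -C "$repo" symbolic-ref --short -q HEAD) || current="(detached HEAD)"
    if [ "$current" = "$expected" ]; then
        echo "OK       $repo: $current"
    else
        echo "MISMATCH $repo: on '$current', expected '$expected'"
        return 1
    fi
}
# Usage, from LES_planet_workflow:
#   check_branch . wrfv4
#   check_branch code hackathon_wrfv4   # or your own physics branch
#   check_branch code/WRF.COMMON/WRFV4 generic
```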

Execute installing and compiling files

From the LES_planet_workflow directory, run the following scripts one by one:

- 2_preparing.sh

- 3_compiling_physics.sh

- 4_compiling_dynamics.sh
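The three scripts can also be chained so that the sequence stops at the first failure and each step keeps a log. This is a sketch: run_build_steps is a hypothetical helper, not part of LES_planet_workflow, and mesoscale.env must be sourced beforehand.

```shell
#!/bin/bash
# Run the installation/compilation scripts in order, from LES_planet_workflow,
# stopping at the first failing one. Output goes to the screen and to <script>.log.
run_build_steps() {
    local step rc
    for step in 2_preparing.sh 3_compiling_physics.sh 4_compiling_dynamics.sh; do
        echo "=== $step ==="
        ./"$step" 2>&1 | tee "${step%.sh}.log"
        rc=${PIPESTATUS[0]}               # exit status of the script, not of tee
        if [ "$rc" -ne 0 ]; then
            echo "$step failed (exit $rc), see ${step%.sh}.log" >&2
            return 1
        fi
    done
}
```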

Good luck!

Executables

If compilation succeeds, the executables are in the 'LES_planet_workflow/code/WRF.COMMON/WRFV4/main' directory. The Generic PCM+WRF executables have the same names as those of terrestrial WRF.

"ideal.exe" prepares the domain; "wrf.exe" runs the simulation.

The modifications made to these files during compilation incorporate the PCM physics as a library, together with the interface between WRF and the PCM.

Some tricks if it does not work

If compilation fails, run

./clean -a

in the WRFV4 directory to remove the intermediate compiled files, then restart the compilation from scratch.
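A clean restart can be sketched as below, assuming the directory layout described above and that re-running 4_compiling_dynamics.sh redoes the WRF configure and compile steps (./clean -a also removes configure.wrf, so configure has to run again). rebuild_dynamics is a hypothetical helper.

```shell
#!/bin/bash
# Clean the WRFV4 tree, then recompile the dynamics.
# $1: path to LES_planet_workflow (default: current directory).
rebuild_dynamics() {
    local wf="${1:-.}"
    ( cd "$wf/code/WRF.COMMON/WRFV4" && ./clean -a ) || return 1
    ( cd "$wf" && ./4_compiling_dynamics.sh )
}
```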

Running a simulation

Initializing a simulation

'input_sounding' file

This file has a header line with 3 values, followed by 5 columns.

The header contains the bottom pressure (mbar), the bottom temperature (K), and "0.0". For example, for Neptune:

10000.0 166.6004857302868 0.0

The 5 columns are vertical profiles of: equivalent altitude, potential temperature, vapor, ice, and X (?).

To initialize these fields, run a 1D simulation and use its diagfi.nc output; exo_k will help you!
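Once the 5 profile columns have been extracted (how they are produced from diagfi.nc is not covered here), assembling the file itself is simple. A sketch, with write_input_sounding as a hypothetical helper and the profile file assumed to be whitespace-separated, one level per line:

```shell
#!/bin/bash
# Build input_sounding: header "p_bottom(mbar) T_bottom(K) 0.0",
# then the 5 profile columns copied from a text file.
write_input_sounding() {
    local p_bottom="$1" t_bottom="$2" profile="$3" out="${4:-input_sounding}"
    # Refuse profiles where any line does not have exactly 5 columns.
    if ! awk 'NF != 5 { exit 1 }' "$profile"; then
        echo "$profile: every line must have 5 columns" >&2
        return 1
    fi
    {
        echo "$p_bottom $t_bottom 0.0"
        cat "$profile"
    } > "$out"
}
```

Usage for Neptune, with a hypothetical profile file: `write_input_sounding 10000.0 166.6004857302868 neptune_profile.txt`.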

'planet_constant' file

The file contains one value per line, in this order:

- gravity (m/s²)

- heat capacity (J/kg/K)

- molar mass (g/mol)

- reference temperature (K)

- bottom pressure (Pa)

- radius (m)

For example, for Neptune:

11.15

10200.

2.3

175.

10.e5

24622.0e3
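Because the order of the values matters, writing the file from a single command avoids mixing them up. A sketch, with write_planet_constant as a hypothetical helper:

```shell
#!/bin/bash
# Write planet_constant, one value per line, in the order:
# gravity, heat capacity, molar mass, reference temperature, bottom pressure, radius.
write_planet_constant() {
    if [ "$#" -ne 6 ]; then
        echo "usage: write_planet_constant g cp molar_mass T_ref p_bottom radius" >&2
        return 1
    fi
    printf '%s\n' "$@" > planet_constant
}
# Neptune example from above:
#   write_planet_constant 11.15 10200. 2.3 175. 10.e5 24622.0e3
```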

'input_coord' file

WRF Settings: 'namelist.input' file

'namelist.input' file

Batch file for Slurm

#! /bin/bash

#SBATCH --job-name=batch_adastra

#SBATCH --account=cin0391

#SBATCH --constraint=GENOA

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=20

#SBATCH --threads-per-core=1 # --hint=nomultithread

#SBATCH --output=batch_adastra.out

#SBATCH --time=06:00:00

source ../mesoscale.env

srun --cpu-bind=threads --label ideal.exe

srun --cpu-bind=threads --label wrf.exe

mkdir wrfout_results_$SLURM_JOB_ID

mv wrfout_d01* wrfout_results_$SLURM_JOB_ID

mv wrfrst* wrfout_results_$SLURM_JOB_ID

cp -rf namelist.input wrfout_results_$SLURM_JOB_ID/used_namelist.input

cp -rf callphys.def wrfout_results_$SLURM_JOB_ID/used_callphys.def

cp input_sounding wrfout_results_$SLURM_JOB_ID/used_input_sounding

mkdir wrfout_results_$SLURM_JOB_ID/messages_simulations

mv batch_adastra.out wrfout_results_$SLURM_JOB_ID/messages_simulations

mv rsl.* wrfout_results_$SLURM_JOB_ID/messages_simulations

Files in the folder

To launch the simulation:

- batch_adastra

WRF specific:

- planet_constant

- input_sounding

- input_coord

- namelist.input

Generic PCM specific:

- run.def

- callphys.def

- gases.def

- traceur.def

Executables:

- ideal.exe

- wrf.exe

The run.def file is required even though it is not used (no need to modify it).
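A missing input file usually only shows up after the job has waited in the queue, so a quick check of the run directory before submitting can save time. check_run_dir is a hypothetical helper that checks exactly the files listed above.

```shell
#!/bin/bash
# Check that a run directory contains every file needed to launch the simulation.
check_run_dir() {
    local dir="${1:-.}" f missing=0
    for f in batch_adastra planet_constant input_sounding input_coord namelist.input \
             run.def callphys.def gases.def traceur.def ideal.exe wrf.exe; do
        if [ ! -e "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    return $missing
}
# Usage, in the run directory:
#   check_run_dir && sbatch batch_adastra
```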

Optional, for additional outputs:

- my_iofields_list.txt

with an additional line in the &time_control section of namelist.input:

iofields_filename = "my_iofields_list.txt"

Example content of my_iofields_list.txt:

+:h:0:DQVAP,DQICE,WW,TT

-:h:0:QH2O
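In WRF's iofields syntax, each line reads `+` or `-` (add or remove variables), a stream type (`h` for history, `i` for input), a stream number (0 being the main wrfout history stream), and a comma-separated list of variables. The sketch below writes the example entries (DQVAP, DQICE, WW, TT added, QH2O removed) and checks that namelist.input points to the file; write_iofields is a hypothetical helper.

```shell
#!/bin/bash
# Write my_iofields_list.txt and check that namelist.input references it.
write_iofields() {
    printf '%s\n' '+:h:0:DQVAP,DQICE,WW,TT' '-:h:0:QH2O' > my_iofields_list.txt
    # Accept either double or single quotes around the file name.
    if ! grep -q "iofields_filename *= *[\"']my_iofields_list\\.txt" namelist.input 2>/dev/null; then
        echo 'add iofields_filename = "my_iofields_list.txt" to &time_control in namelist.input' >&2
        return 1
    fi
}
```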

Remarks

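For advanced users: when compiling the model, preprocessor blocks such as

#ifndef MESOSCALE

use ...

#else

use ...

#endif

select which lines are compiled depending on the configuration: the first branch is compiled when MESOSCALE is not defined, the #else branch when it is defined, as in the mesoscale configuration.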